In the 1980s there was renewed interest in neural networks, both in academia and in industry. A neural network is an algorithm loosely inspired by the neural connections present in the neocortex. The interest was motivated by the rediscovery of algorithms for training these networks, notably backpropagation. Through training, a neural network can learn to perform a task. And since neural networks are implemented in computers, we have computers that can learn. Learning is an intellectual ability that people share with monkeys, among other animals with a neocortex. For this reason, neural networks are the backbone of machine learning, which according to some is part of artificial intelligence.

Neural networks can learn to classify objects and also to reproduce the behaviour of complex systems. They learn from examples. When we want to teach a neural network to differentiate between apples and oranges, we have to show it examples of both fruits, each with a label indicating whether it is an orange or an apple. The point is that, once trained, the neural network will be able to correctly classify oranges and apples different from the ones used during training. This is because a neural network does not merely memorise the examples; it is able to generalise from them. This is the key to learning.
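The apples and oranges example can be sketched with a perceptron, which is a single artificial neuron rather than a full network. This is only a minimal illustration under stated assumptions: the two features (redness and skin roughness, both between 0 and 1), the data values and the function names are all made up for the example.

```python
# Hypothetical toy data, invented for this sketch.
# Each fruit is described by (redness, skin roughness), both from 0 to 1.
# Label 1 means apple, label 0 means orange.
TRAIN = [
    ((0.9, 0.2), 1),
    ((0.8, 0.1), 1),
    ((0.7, 0.3), 1),
    ((0.3, 0.8), 0),
    ((0.2, 0.9), 0),
    ((0.4, 0.7), 0),
]


def predict(weights, bias, features):
    """Return 1 (apple) if the weighted sum is positive, else 0 (orange)."""
    total = bias + sum(w * x for w, x in zip(weights, features))
    return 1 if total > 0 else 0


def train(examples, epochs=100, rate=0.1):
    """Perceptron rule: nudge the weights whenever an example is misclassified."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for features, label in examples:
            error = label - predict(weights, bias, features)
            if error != 0:
                mistakes += 1
                weights = [w + rate * error * x for w, x in zip(weights, features)]
                bias += rate * error
        if mistakes == 0:  # every training example is classified correctly
            break
    return weights, bias


weights, bias = train(TRAIN)

# Two fruits that were NOT in the training set.
NAMES = {1: "apple", 0: "orange"}
print(NAMES[predict(weights, bias, (0.85, 0.25))])  # a red, smooth fruit
print(NAMES[predict(weights, bias, (0.25, 0.85))])  # a less red, rough fruit
```

With this toy data, the two unseen fruits come out as an apple and an orange respectively, even though neither appeared during training. That is generalisation in miniature; real problems need far more examples and far more neurons.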

But the interest in neural networks that arose during the eighties faded as the following decade started, because more promising machine learning methods appeared. However, a group of indomitable Canadian researchers persevered and transformed neural networks into deep learning.

Deep learning is a family of algorithms built on neural networks with many layers, with the same aim and better performance. The number of neurons and connections is higher, but the main difference is the capacity for abstraction. When we train a classical neural network to differentiate between apples and oranges, we cannot present the items as they are; we have to extract some features that describe the oranges and apples, such as colour, shape and size. This is what we call abstraction in this context. In contrast to classical neural networks, deep learning is able to do the abstraction by itself. This is why deep learning is thought to be able to understand what it sees and hears, and it is therefore a bridge between machine learning and artificial intelligence.

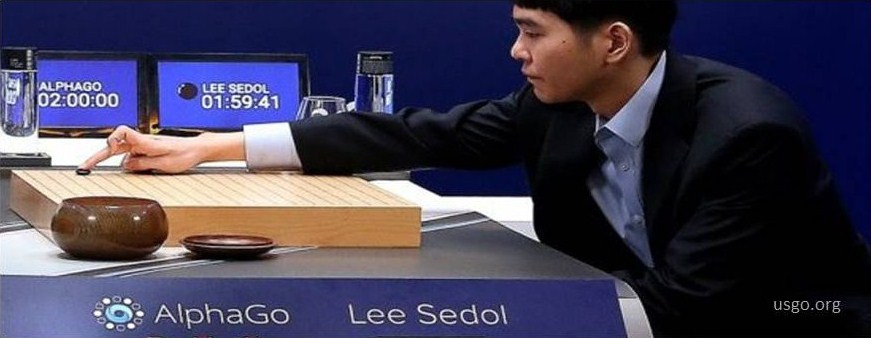

As happened with neural networks, deep learning has attracted huge interest among companies. In 2013, Facebook reportedly tried and failed to buy the company DeepMind, but Google succeeded one year later, reportedly paying around 500 million dollars for it. In case anybody missed the arrival of deep learning in the media, it became mainstream in early 2016 when Google DeepMind's software AlphaGo beat Lee Sedol, the Go champion. This was an unprecedented technical success because Go is much harder for a computer than chess. When IBM's Deep Blue beat Garry Kasparov in 1997, it used a strategy based on searching through a huge number of possible moves several turns ahead. However, this strategy is not feasible in Go because the number of possibilities is astronomically larger than in chess. For this reason, Google DeepMind's AlphaGo is not explicitly programmed with a strategy for Go; it learns to play. The machine learned first from records of games between human experts and then by playing many times against itself, improving until it could beat the best human players.

Deep learning is not an arcane secret; anybody who wants to learn it can do so. There are freely available tools, like Theano, TensorFlow and H2O, that allow anyone with programming knowledge and the concepts in mind to try it. OpenAI has freely released its first software, which is built around the reinforcement learning paradigm. There are also companies offering commercial products on which applications can be built. These include the Spanish Artelnics and the Californian Numenta. Deep learning is being successfully used for face recognition and for interpreting spoken commands.

Deep learning, alongside other machine learning paradigms, could be an important opportunity for innovation. It could be one of the tools to unleash the value hidden in big data repositories. Moreover, in industrial practice it could be used to detect and classify faults or defects, to model complex systems for use in control schemes, and for novelty detection.
