Artificial intelligence (AI) is helping to transform a large number of sectors, from recommending a song or monitoring our health through a smartwatch to the manufacturing industry. One obstacle to this transformation is the overall complexity of AI systems, which often makes their results hard to interpret and the way they are produced hard to see. In this context, the explanatory capability of AI (or “explainability”) refers to the ability of AI systems to make their decisions and actions understandable to users, a field known as eXplainable AI (XAI). This is crucial for building trust and ensuring the responsible adoption of these technologies.

Explainable AI (XAI): the ability of AI systems to make their decisions and actions understandable to users

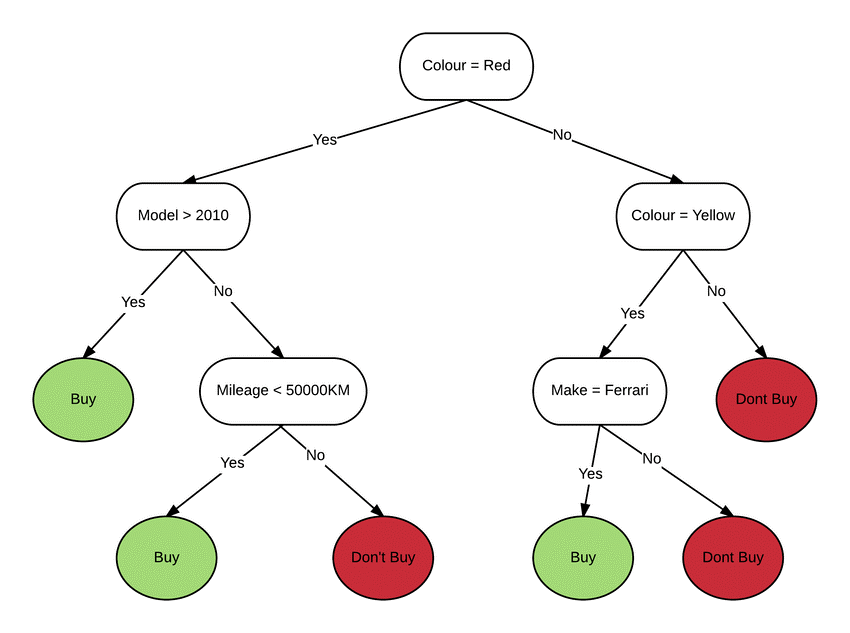

A wide range of technological solutions is currently being investigated to improve the explainability of AI algorithms. One of the main strategies is the creation of intrinsically explainable models (ante hoc explanations). Models of this type, such as decision trees and association rules, are designed to be transparent and understandable by nature: their logical structure allows users to easily follow the reasoning behind AI-based decisions. Tools for visualizing AI explanations are also key, since they graphically represent the model's decision-making process and thus make it easier for users to understand. These tools can take different forms, such as dedicated dashboards, augmented reality glasses, or natural language explanations (spoken or written).
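To make the idea of an intrinsically explainable model more concrete, the following Python sketch hand-builds a tiny decision tree and returns, along with each prediction, the chain of rules that produced it. The tree, the variable names (`temperature`, `pressure`), the thresholds and the labels are all hypothetical and chosen only for illustration; a real tree would normally be learned from data.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    """A node of a hand-built decision tree (hypothetical example)."""
    feature: Optional[str] = None    # variable tested at this node (None for a leaf)
    threshold: float = 0.0           # go left if value <= threshold, right otherwise
    left: Optional["Node"] = None
    right: Optional["Node"] = None
    label: Optional[str] = None      # prediction stored at a leaf


def predict_with_explanation(node, sample):
    """Follow the tree from the root; the list of rules applied is the explanation."""
    rules = []
    while node.label is None:
        value = sample[node.feature]
        if value <= node.threshold:
            rules.append(f"{node.feature} = {value} <= {node.threshold}")
            node = node.left
        else:
            rules.append(f"{node.feature} = {value} > {node.threshold}")
            node = node.right
    return node.label, rules


# Hypothetical tree: thresholds and labels are illustrative, not learned from real data.
tree = Node(
    "temperature", 180.0,
    left=Node(label="normal"),
    right=Node("pressure", 2.5, left=Node(label="check"), right=Node(label="alarm")),
)

label, rules = predict_with_explanation(tree, {"temperature": 195.0, "pressure": 3.1})
print(label)  # alarm
for rule in rules:
    print("  because", rule)
```

Because every prediction is reached through a short sequence of human-readable comparisons, the explanation comes directly from the model's own structure, with no separate explanation step.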

Another commonly used family of explanation techniques is post hoc methods: once the AI model has been built and trained, it is processed and analyzed a posteriori to explain its results. For example, some of these techniques evaluate how much each input variable contributes to the final result of the system (sensitivity analysis). Among post hoc explainability techniques, SHAP (SHapley Additive exPlanations), a method based on cooperative game theory, makes it possible to extract coefficients that quantify the importance of each input variable in the final result of an AI algorithm.
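The Shapley values on which SHAP is based can be computed exactly for a model with only a few inputs: for each input, average the change in the model's output when that input is added to every possible coalition (subset) of the other inputs, with the standard Shapley weights. The sketch below is an illustration under simplifying assumptions: inputs outside a coalition are replaced by a single baseline value (here zeros), the model `toy_model` is hypothetical, and the cost grows exponentially with the number of inputs. It is not the API of the `shap` library, which relies on approximations and background datasets.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values of each input of `model` at point `x`.

    Inputs outside a coalition are set to their `baseline` value (a simplifying assumption).
    """
    n = len(x)

    def value(coalition):
        # Inputs in the coalition keep the instance's values; the rest use the baseline.
        z = [x[j] if j in coalition else baseline[j] for j in range(n)]
        return model(z)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions S of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi


def toy_model(z):
    # Hypothetical model with an interaction between inputs 0 and 2.
    return 2 * z[0] + 3 * z[1] + z[0] * z[2]


x, baseline = [1.0, 2.0, 3.0], [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, x, baseline)
print([round(v, 6) for v in phi])  # [3.5, 6.0, 1.5]
print(round(sum(phi), 6), toy_model(x) - toy_model(baseline))  # 11.0 11.0
```

The last line checks the efficiency property of Shapley values: the contributions add up to the difference between the model's output for the instance and for the baseline. The interaction term `z[0] * z[2]` is split equally between inputs 0 and 2.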

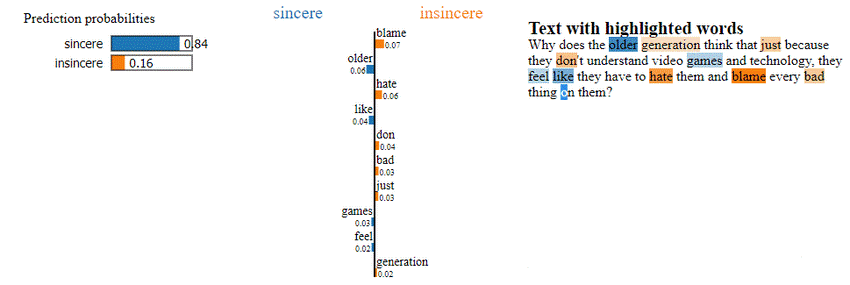

Other XAI techniques include decomposition, which divides the AI model into simpler components that are easier to explain, and knowledge distillation into surrogate models, which approximate the behavior of the original system while being easier to understand. So-called “local explanations”, on the other hand, are methods that explain individual examples (a specific input and its output) rather than the entire AI model. Examples include the explanations provided by tools such as LIME (Local Interpretable Model-agnostic Explanations). As an illustration of LIME, the example in the following figure shows a specific inference in a text classification task, in which a text is classified as “sincere” with a probability of 84%, and the words most relevant to that decision are highlighted as an explanation of this individual classification [Linardatos et al. (2020)].
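The core idea behind LIME can be sketched in a few lines: perturb the instance (here, by randomly removing words), query the black-box model on each perturbed version, weight each sample by its proximity to the original text, and fit a weighted linear model whose coefficients act as the local explanation. This is a simplified reimplementation under stated assumptions, not the `lime` package's API: the classifier `black_box` and its word scores are hypothetical, the kernel width of 0.25 on the fraction of removed words is an assumed choice, and the small linear solver does not guard against singular systems.

```python
import math
import random

# Hypothetical black-box classifier: probability that a text is "sincere".
WORD_SCORES = {"thanks": 1.5, "great": 1.0, "stupid": -2.0}


def black_box(words):
    score = 0.2 + sum(WORD_SCORES.get(w, 0.0) for w in words)
    return 1.0 / (1.0 + math.exp(-score))


def solve(a, b):
    """Solve the linear system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


def explain_locally(text, model, num_samples=1000, kernel_width=0.25, seed=0):
    """LIME-style local explanation: returns (word, weight) pairs sorted by |weight|."""
    words = text.split()
    d = len(words)
    rng = random.Random(seed)
    # Binary masks (1 = keep word, 0 = remove it); the first one is the unmodified text.
    masks = [[1] * d] + [[rng.randint(0, 1) for _ in range(d)] for _ in range(num_samples - 1)]
    rows, targets, weights = [], [], []
    for mask in masks:
        kept = [w for w, keep in zip(words, mask) if keep]
        distance = 1.0 - sum(mask) / d                 # fraction of words removed
        weights.append(math.exp(-distance ** 2 / kernel_width ** 2))
        rows.append([1.0] + [float(v) for v in mask])  # leading 1.0 = intercept
        targets.append(model(kept))
    p = d + 1
    # Weighted least squares through the normal equations (X^T W X) beta = X^T W y.
    xtwx = [[sum(w * r[i] * r[j] for r, w in zip(rows, weights)) for j in range(p)]
            for i in range(p)]
    xtwy = [sum(w * r[i] * y for r, w, y in zip(rows, weights, targets)) for i in range(p)]
    beta = solve(xtwx, xtwy)
    # Drop the intercept and rank words by the magnitude of their local coefficient.
    return sorted(zip(words, beta[1:]), key=lambda item: abs(item[1]), reverse=True)


for word, weight in explain_locally("thanks for this great answer", black_box):
    print(f"{word:>8}  {weight:+.3f}")
```

With this toy classifier, one would expect “thanks” and “great” to receive the largest positive weights and the neutral words to stay close to zero, much as the LIME figure highlights the words that drove the “sincere” classification.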

A further approach to XAI integrates user input into the construction of the AI model, and is generally known as “Human-in-the-Loop” (HITL). It allows users to interact with the model (e.g. by labelling new data) and to supervise how the algorithm is built, adjusting its decisions in real time and thus improving the overall transparency of the system.
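HITL covers many interaction patterns. One common form is active learning with uncertainty sampling, in which the model repeatedly asks a person to label the example it is least sure about and is updated after each answer. The sketch below illustrates that loop on a deliberately tiny, hypothetical model (a one-dimensional threshold); the person is simulated by the hypothetical function `ask_operator`, and its hidden boundary of 0.615 is an assumption made only so the example can run unattended.

```python
def ask_operator(x):
    # Simulated human: in a real HITL system a person would provide this label.
    return x >= 0.615  # hypothetical boundary known only to the "operator"


def fit_threshold(labeled):
    """Place the decision threshold midway between the closest negative and positive labels."""
    max_negative = max(x for x, y in labeled if not y)
    min_positive = min(x for x, y in labeled if y)
    return (max_negative + min_positive) / 2


def human_in_the_loop(pool, labeled, ask_human, rounds):
    """Each round, query the unlabeled point nearest the threshold (the least certain one)."""
    labeled = list(labeled)
    already = {x for x, _ in labeled}
    pool = [x for x in pool if x not in already]
    threshold = fit_threshold(labeled)
    for _ in range(rounds):
        if not pool:
            break
        query = min(pool, key=lambda x: abs(x - threshold))
        pool.remove(query)
        labeled.append((query, ask_human(query)))  # the human's answer extends the training set
        threshold = fit_threshold(labeled)         # update the model after every answer
    return threshold, labeled


pool = [i / 100 for i in range(101)]
threshold, labeled = human_in_the_loop(pool, [(0.0, False), (1.0, True)], ask_operator, rounds=10)
print(f"learned threshold {threshold:.3f} after {len(labeled) - 2} questions")
```

Each answer roughly halves the interval in which the boundary can lie, so a handful of questions is enough in this toy setting.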

At CARTIF, we are actively working on several AI-related projects, such as s-X-AIPI, to help advance the explainability of AI systems used in industrial applications. A significant example of our work is the dashboards (visualization or control panels) designed to supervise and analyze the performance of the manufacturing processes studied in the project. These dashboards allow plant operators to visualize and understand the current status of the industrial process in real time.

Predictive and anomaly detection models have been developed for industrial asphalt production processes. They not only anticipate future values but also detect unusual situations in the process and explain the factors that influence these predictions and detections. This helps operators make well-informed decisions, better understand the results generated by the AI systems, and take appropriate action.
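The post does not describe the models used for the asphalt process, so the following is only a minimal, hypothetical illustration of an anomaly detector that also reports which variables drove its decision: each variable of a new reading is compared with normal operating data through a z-score, and the variables whose deviation exceeds a threshold are returned, largest first, as the explanation. The variable names, the reference data and the threshold of 3 standard deviations are assumptions.

```python
from statistics import mean, stdev


def fit_reference(history):
    """Per-variable mean and standard deviation from readings taken during normal operation."""
    reference = {}
    for name in history[0]:
        values = [reading[name] for reading in history]
        reference[name] = (mean(values), stdev(values))
    return reference


def detect_and_explain(reading, reference, threshold=3.0):
    """Flag an anomaly if any variable deviates more than `threshold` standard deviations.

    Returns (is_anomaly, contributors), where contributors lists (variable, z-score)
    pairs above the threshold, sorted by the size of their deviation.
    """
    z_scores = {name: (reading[name] - mu) / sigma for name, (mu, sigma) in reference.items()}
    contributors = sorted(
        ((name, z) for name, z in z_scores.items() if abs(z) > threshold),
        key=lambda item: abs(item[1]),
        reverse=True,
    )
    return bool(contributors), contributors


# Hypothetical normal-operation readings of an asphalt mixing plant.
history = [
    {"mix_temperature": 160.0, "drum_speed": 8.0, "fuel_flow": 2.0},
    {"mix_temperature": 162.0, "drum_speed": 8.2, "fuel_flow": 2.1},
    {"mix_temperature": 158.0, "drum_speed": 7.8, "fuel_flow": 1.9},
    {"mix_temperature": 161.0, "drum_speed": 8.1, "fuel_flow": 2.0},
    {"mix_temperature": 159.0, "drum_speed": 7.9, "fuel_flow": 2.0},
]
reference = fit_reference(history)

is_anomaly, contributors = detect_and_explain(
    {"mix_temperature": 175.0, "drum_speed": 8.05, "fuel_flow": 2.02}, reference
)
print(is_anomaly)  # True
for name, z in contributors:
    print(f"{name}: {z:+.1f} standard deviations from normal")
```

A per-variable z-score ignores correlations between variables; it is used here only because its explanation (which variables deviate, and by how much) is immediate to read.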

Explainability is essential for the safe and effective adoption of AI in all kinds of sectors: industry, retail, logistics, pharma, construction… At CARTIF, we are committed to developing technologies for AI-based applications that not only improve processes and services but are also transparent and comprehensible to users; in short, applications that are explainable.

Co-author

Iñaki Fernández. PhD in Artificial Intelligence. Researcher in the Health and Wellbeing Area of CARTIF.

- Behind the Curtain: Explainable Artificial Intelligence - 12 July 2024
