We are used to seeing how new technologies help people with physical disabilities: motorized wheelchairs, revolutionary prostheses and even image or voice sensors connected directly to the brain through electrodes. But what about people with a mental disability? Consider people who suffer from schizophrenia. This is a chronic condition characterized by behaviors that the community perceives as abnormal. In particular, many people with schizophrenia have difficulty recognizing emotions in other people's facial expressions, which seriously affects their social behavior. Moreover, this difficulty is not limited to schizophrenia: it is also observed in mania, dementia, brain injury and autism, among other conditions.

This is where social robotics comes into play. A social robot is a robot that interacts and communicates with people (or other robots) by following social behaviors and rules. Traditionally, a robot is assumed to take the form of a physical device. However, the same interaction skills designed for a physical robot can be built into a virtual character displayed on a computer. From this viewpoint, an avatar may be considered a robot, in line with the new technological paradigm in which the boundary between physical and virtual reality is progressively blurring.

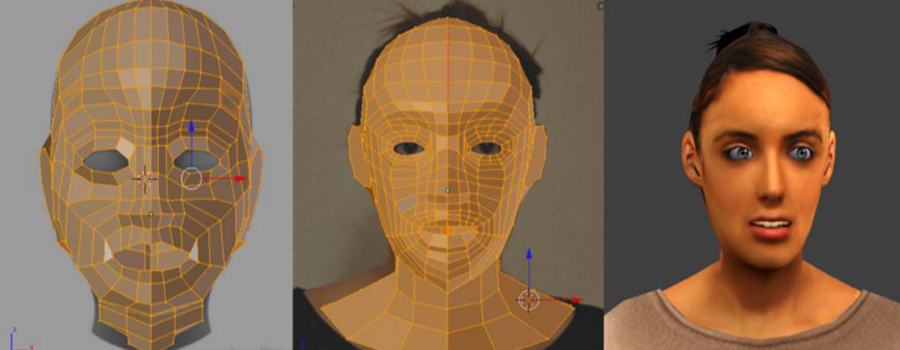

Now, what advantages do avatars offer in psychological and psychiatric therapy? In my opinion, they are numerous. An avatar can reach a level of expressiveness comparable (if not superior) to that of a physical robot, or even a real person. A hyper-realistic human appearance is not even needed: a simple cartoon can be extremely expressive. (Think of the Coyote when, chasing the Road Runner, he runs past the edge of the cliff.) In addition, unlike a real person, an avatar's expressiveness can be controlled precisely by a therapist. In this way, the avatar can display emotions in varying degrees, from barely emerging to very marked, randomly or in progression, or even in response to the user's behavior.
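To make the idea of graded emotional display more concrete, here is a minimal sketch of the three schedules mentioned above: progressive, random and adaptive. It assumes, purely for illustration, that the avatar's animation engine accepts an expression intensity between 0.1 (barely emerging) and 1.0 (very marked). The function names are hypothetical, and the adaptive rule is a simple staircase borrowed from psychophysics, not a method taken from any specific therapy system.

```python
import random
from typing import Optional

# Hypothetical intensity scale: 0.1 = barely emerging, 1.0 = very marked.
MIN_INTENSITY = 0.1
MAX_INTENSITY = 1.0


def progressive(steps: int) -> list[float]:
    """Intensities rising linearly from subtle to fully marked."""
    return [(i + 1) / steps for i in range(steps)]


def randomized(steps: int, seed: Optional[int] = None) -> list[float]:
    """Random intensities within the allowed range (seed makes a session repeatable)."""
    rng = random.Random(seed)
    return [round(rng.uniform(MIN_INTENSITY, MAX_INTENSITY), 2) for _ in range(steps)]


def adaptive(current: float, user_recognized: bool, step: float = 0.1) -> float:
    """Staircase rule (an assumption for this sketch): after a correct answer the
    next expression is subtler (harder); after a miss it is more marked (easier)."""
    nxt = current - step if user_recognized else current + step
    # Clamp to the allowed range and round to avoid floating-point drift.
    return round(min(MAX_INTENSITY, max(MIN_INTENSITY, nxt)), 2)


if __name__ == "__main__":
    print(progressive(4))
    level = 0.5
    for answer in [True, True, False, True]:
        level = adaptive(level, answer)
        print("next intensity:", level)
```

The staircase keeps the exercise near the limit of what the user can recognize, which is one plausible way a therapist could tune difficulty; the step size and range would in practice be clinical choices.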

Another key aspect is sensing, and here computer vision technologies play a decisive role. We are already used to mobile phone cameras that detect and track faces, recognize which faces belong to our family and friends, and detect when people open their eyes and smile. The same technology can be used to perceive the user's attitude during the interaction: whether he or she smiles or looks sad, and whether he or she is calm, nervous or anxious. Voice analysis can complement this information. The words a person uses say a lot about his or her mood, and tone and rhythm also provide crucial information: an angry person talks fast and loud, while someone who is bored speaks slowly, slurring the words. Admittedly, voice analysis currently lags a step behind image analysis, probably because understanding speech is closely tied to artificial intelligence problems that remain challenging (although they are increasingly within technology's reach).
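The cue described above (angry speech is fast and loud, bored speech is slow) can be illustrated with a toy rule. The sketch below assumes mono audio samples normalized to [-1.0, 1.0] and a word count supplied by some speech recognizer; every threshold is a hypothetical placeholder, not a validated value. Real emotion-recognition systems rely on trained models over many acoustic features, so this only shows how speech rate and loudness could be turned into a rough arousal hint.

```python
import math
from dataclasses import dataclass


@dataclass
class Utterance:
    samples: list[float]  # mono audio, assumed normalized to [-1.0, 1.0]
    duration_s: float     # length of the utterance in seconds
    word_count: int       # assumed to come from a speech recognizer


def rms(samples: list[float]) -> float:
    """Root-mean-square amplitude, a simple proxy for loudness."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def arousal_hint(u: Utterance,
                 fast_wps: float = 3.0, slow_wps: float = 1.5,
                 loud_rms: float = 0.3, quiet_rms: float = 0.05) -> str:
    """Rough label from speech rate and loudness (all thresholds hypothetical)."""
    if u.duration_s <= 0:
        raise ValueError("duration must be positive")
    rate = u.word_count / u.duration_s  # words per second
    level = rms(u.samples)
    if rate >= fast_wps and level >= loud_rms:
        return "high"     # fast and loud: compatible with anger or agitation
    if rate <= slow_wps and level <= quiet_rms:
        return "low"      # slow and quiet: compatible with boredom
    return "neutral"      # mixed or moderate cues: no confident reading
```

In a complete system this hint would be fused with the facial-expression reading rather than used on its own, since neither channel is reliable in isolation.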

Where does this lead us? To a virtual (or physical) avatar that follows the user's face with its eyes, interprets the user's emotions and reacts to them accordingly, speaks in a friendly manner and can be supervised by a therapist, with the added advantage of being available 24 hours a day. Ultimately, a companion that acts as a personal trainer for improving the perception of human emotions. This is not the future. This is the present.

- Virtual avatar for the treatment of schizophrenia - 15 July 2016