In the new era of Industry 5.0, robots are no longer just tools for automation; they are becoming active collaborators for people. The goal is no longer only to produce faster, but to build flexible, personalized, and human-centric environments. This raises a fundamental challenge: how can we enable robots to understand and communicate with us naturally?

The answer lies in Human-Robot Interaction (HRI), a field that seeks to make machines perceive, interpret, and respond to people appropriately. Yet one of the biggest obstacles is the lack of a universal language that allows different systems and sensors to work together seamlessly.

This is where ROS4HRI comes in: an open standard driven by our partner in the ARISE project, PAL Robotics. Within this ecosystem, PAL contributes its expertise in humanoid and social robotics, ensuring that ROS4HRI is validated in real environments, from test labs to production settings such as hospitals and healthcare centers.

What is ROS4HRI?

ROS4HRI is an extension of ROS2 (Robot Operating System) that defines a set of standardized interfaces, messages, and APIs designed for human-robot interaction.

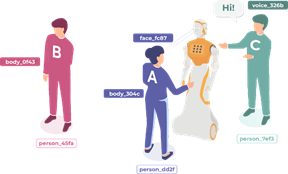

Its goal is simple: to create a common language that unifies how robots perceive and interpret human signals, regardless of the sensors or algorithms used. With ROS4HRI, robots can manage key information such as:

- Person identity: recognition and individual tracking.

- Social attributes: emotions, facial expressions, even estimated age.

- Non-verbal interactions: gestures, gaze, body posture.

- Multimodal signals: voice, intentions, and natural language commands.
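To make the list above concrete, here is a minimal Python sketch of a per-person record that groups those four kinds of information. It is an illustration only: the class and field names (`PersonRecord`, `FaceInfo`, `BodyInfo`) are hypothetical and are not the ROS4HRI message definitions, which are documented in the official repository. Every perception field is optional, because a given sensor or algorithm may provide only part of the picture.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class FaceInfo:
    # Social attributes estimated from the face; detectors may leave any of them unset
    expression: Optional[str] = None
    estimated_age: Optional[float] = None


@dataclass
class BodyInfo:
    # Non-verbal cues: named joints with 3D positions plus coarse gesture/gaze labels
    joints: Dict[str, Tuple[float, float, float]] = field(default_factory=dict)
    gesture: Optional[str] = None
    gaze_target: Optional[str] = None


@dataclass
class PersonRecord:
    # Hypothetical aggregate record, NOT a ROS4HRI message type
    person_id: str                                        # identity, stable across frames
    face: Optional[FaceInfo] = None                       # social attributes
    body: Optional[BodyInfo] = None                       # non-verbal interaction
    utterances: List[str] = field(default_factory=list)   # multimodal: recognized speech

    def summary(self) -> str:
        """Describe only the information that is actually available."""
        parts = [f"person {self.person_id}"]
        if self.face and self.face.expression:
            parts.append(f"expression={self.face.expression}")
        if self.body and self.body.gesture:
            parts.append(f"gesture={self.body.gesture}")
        if self.utterances:
            parts.append(f"said={self.utterances[-1]!r}")
        return ", ".join(parts)


if __name__ == "__main__":
    anna = PersonRecord(
        "anna",
        face=FaceInfo(expression="happy", estimated_age=34.0),
        body=BodyInfo(gesture="wave"),
        utterances=["hello robot"],
    )
    print(anna.summary())
    # person anna, expression=happy, gesture=wave, said='hello robot'
```

The point of the design is that application code asks one record for what is known about a person, instead of querying each perception component separately.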

ROS4HRI Architecture

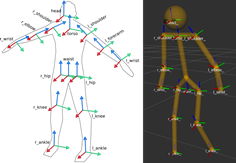

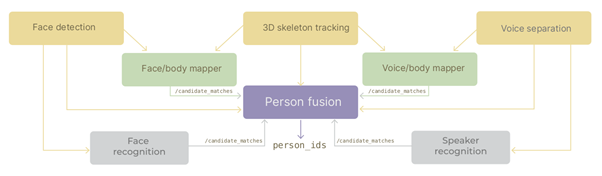

The design of ROS4HRI follows a modular approach, breaking down barriers between different perception systems. This ensures robots can process human information in a coherent and consistent way, fully aligned with the open philosophy of ROS2. Its main components include:

- Standard messages: to represent human identities, faces, skeletons, and expressions.

- Interaction APIs: giving applications uniform access to this data.

- Multimodal integration: combining voice, vision, and gestures for richer interpretation.

- Compatibility with ROS2 and Vulcanexus: enabling deployment in distributed, mission-critical environments.
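The idea of uniform access regardless of the underlying detector can be illustrated with a small adapter layer. In this sketch, two hypothetical face detectors report bounding boxes in different conventions (pixel corners vs. normalized center and size); each adapter converts its output into one shared, normalized representation, so application code never needs to know which detector produced the data. The names `FaceROI`, `ADAPTERS`, `standardize` and both detector formats are assumptions made for illustration; they are not part of the ROS4HRI API.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class FaceROI:
    # Hypothetical shared representation: box normalized to image size, origin top-left
    face_id: str
    x: float
    y: float
    width: float
    height: float


def from_pixel_detector(det: dict, img_w: int, img_h: int) -> FaceROI:
    # Hypothetical detector A: pixel corners (x1, y1, x2, y2)
    x1, y1, x2, y2 = det["box"]
    return FaceROI(det["id"], x1 / img_w, y1 / img_h, (x2 - x1) / img_w, (y2 - y1) / img_h)


def from_normalized_detector(det: dict, img_w: int, img_h: int) -> FaceROI:
    # Hypothetical detector B: already normalized center and size;
    # image size is unused but kept so all adapters share one signature
    cx, cy, w, h = det["cx"], det["cy"], det["w"], det["h"]
    return FaceROI(det["track"], cx - w / 2, cy - h / 2, w, h)


Adapter = Callable[[dict, int, int], FaceROI]
ADAPTERS: Dict[str, Adapter] = {
    "pixel": from_pixel_detector,
    "normalized": from_normalized_detector,
}


def standardize(source: str, detections: List[dict], img_w: int, img_h: int) -> List[FaceROI]:
    """Convert raw detections from a named source into the shared representation."""
    adapter = ADAPTERS[source]
    return [adapter(d, img_w, img_h) for d in detections]


if __name__ == "__main__":
    a = standardize("pixel", [{"id": "f1", "box": (160, 120, 320, 360)}], 640, 480)
    b = standardize("normalized", [{"track": "f1", "cx": 0.375, "cy": 0.5, "w": 0.25, "h": 0.5}], 640, 480)
    print(a == b)  # True: both detectors yield the same standardized record
```

Adding support for a new detector then means writing one more adapter, while every consumer of `FaceROI` stays unchanged.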

Some of its core modules are shown in the figures above. For more details, the code and documentation are available in the official repository: github.com/ros4hri

ROS4HRI in the ARISE Ecosystem

In the European project ARISE, ROS4HRI plays a key role within the ARISE middleware, integrating with ROS2, Vulcanexus, and FIWARE.

This powerful combination enables Industry 5.0 scenarios where robots equipped with ROS4HRI can:

- Recognize an operator and adapt their behavior based on role or gestures.

- Interpret social signals, such as signs of fatigue or stress, to provide more human-aware support.

- Share information in real time with industrial management platforms (e.g., through FIWARE), enriching decision-making.
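As an illustration of the last point, the sketch below packages an operator's state as an NGSI-LD entity, the JSON-LD data model used by FIWARE context brokers. The entity type `Operator` and the attributes `role` and `fatigueLevel` are hypothetical (they are not taken from ARISE or from a FIWARE Smart Data Model), and the `@context` URL is assumed to be the ETSI NGSI-LD core context, which should be checked against the broker version in use. Actually sending the entity (for example, with an HTTP POST to the broker's `/ngsi-ld/v1/entities` endpoint) is left out.

```python
import json
from datetime import datetime, timezone

# Assumed NGSI-LD core context; verify against the context broker in use
NGSI_LD_CORE_CONTEXT = "https://uri.etsi.org/ngsi-ld/v1/ngsi-ld-core-context.jsonld"


def operator_entity(operator_id: str, role: str, fatigue: float, observed: datetime) -> dict:
    """Build an NGSI-LD entity for an operator (hypothetical entity type and attributes)."""
    if not 0.0 <= fatigue <= 1.0:
        raise ValueError("fatigue is expected in [0, 1] in this sketch")
    observed_at = observed.astimezone(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ")
    return {
        "id": f"urn:ngsi-ld:Operator:{operator_id}",
        "type": "Operator",
        "role": {"type": "Property", "value": role},
        # observedAt records when the robot perceived this value, not when it was sent
        "fatigueLevel": {"type": "Property", "value": fatigue, "observedAt": observed_at},
        "@context": [NGSI_LD_CORE_CONTEXT],
    }


if __name__ == "__main__":
    entity = operator_entity("op7", "assembler", 0.7, datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc))
    print(json.dumps(entity, indent=2))
```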

What makes it even more interesting is that ROS4HRI does not operate in isolation: it leverages resources already available within the community. A great example is MediaPipe, Google’s widely used library for gesture, pose, and face recognition. With ROS4HRI, MediaPipe outputs (like 2D/3D skeletons or hand detection) can be seamlessly integrated into ROS2 in a standardized way.

A practical example

One practical example within ARISE is a module for detecting finger movements. A ROS2 package was developed that follows the ROS4HRI standard and uses Google's MediaPipe library to process video from a camera. The main node extracts the 3D coordinates of the hand joints and publishes them on a ROS topic that follows ROS4HRI naming conventions, such as /humans/hands/<id>/joint_states.
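A `joint_states` topic conventionally carries `sensor_msgs/JointState`, whose parallel `name`, `position`, `velocity` and `effort` arrays hold one scalar per joint. The article does not detail how the ARISE package maps MediaPipe's 3D coordinates into that format, so the following is only one possible approach, written in plain Python without ROS dependencies. It assumes the 21 hand landmarks arrive in MediaPipe Hands order (0 = wrist, 4 = thumb tip, 8 = index fingertip, and so on) and computes a flexion angle in radians at each finger joint. The joint names and the angle convention (0 for a straight joint) are assumptions.

```python
import math
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float, float]

# Landmark chains in MediaPipe Hands order (21 landmarks, index 0 = wrist)
FINGER_CHAINS: Dict[str, List[int]] = {
    "thumb": [0, 1, 2, 3, 4],
    "index": [0, 5, 6, 7, 8],
    "middle": [0, 9, 10, 11, 12],
    "ring": [0, 13, 14, 15, 16],
    "pinky": [0, 17, 18, 19, 20],
}
# Hypothetical joint names for the three inner landmarks of each chain
JOINT_NAMES: Dict[str, List[str]] = {
    "thumb": ["cmc", "mcp", "ip"],
    "index": ["mcp", "pip", "dip"],
    "middle": ["mcp", "pip", "dip"],
    "ring": ["mcp", "pip", "dip"],
    "pinky": ["mcp", "pip", "dip"],
}


def flexion(parent: Point, joint: Point, child: Point) -> float:
    """Bend angle at `joint` in radians: 0 when the three points are collinear."""
    u = [p - j for p, j in zip(parent, joint)]
    v = [c - j for c, j in zip(child, joint)]
    nu, nv = math.hypot(*u), math.hypot(*v)
    if nu == 0.0 or nv == 0.0:
        return 0.0  # degenerate input (coincident landmarks)
    cos_angle = sum(a * b for a, b in zip(u, v)) / (nu * nv)
    cos_angle = max(-1.0, min(1.0, cos_angle))  # guard against rounding outside [-1, 1]
    return math.pi - math.acos(cos_angle)


def hand_joint_state(landmarks: Sequence[Point]) -> Dict[str, List]:
    """Map 21 landmarks to JointState-like parallel lists of names and angles."""
    if len(landmarks) != 21:
        raise ValueError("expected 21 MediaPipe hand landmarks")
    names: List[str] = []
    positions: List[float] = []
    for finger, chain in FINGER_CHAINS.items():
        for k in range(1, len(chain) - 1):
            names.append(f"{finger}_{JOINT_NAMES[finger][k - 1]}")
            positions.append(flexion(landmarks[chain[k - 1]], landmarks[chain[k]], landmarks[chain[k + 1]]))
    return {"name": names, "position": positions}


def hand_topic(hand_id: str) -> str:
    """Per-hand topic following the pattern quoted in the article."""
    return f"/humans/hands/{hand_id}/joint_states"
```

In a ROS2 node, the two lists would presumably be copied into the `name` and `position` fields of a `JointState` message and published on `hand_topic(hand_id)`; publishing raw 3D coordinates instead, as the article describes, would need a different encoding.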

Thanks to this standardized format, other system components (for instance, an RViz visualizer or a robot controller) can consume this data interoperably, enabling applications like gesture-based robot control.
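On the consuming side, gesture-based control can be as simple as watching the distance between two fingertips. The sketch below assumes the consumer receives the 21 hand landmarks as 3D coordinates in MediaPipe Hands order. It detects a pinch when the thumb-tip to index-tip distance falls below a fraction of the palm length (wrist to middle-finger base), then debounces the result over several frames before emitting an open/close command for a gripper. The threshold, frame count and `GripperCommander` class are hypothetical tuning choices, not part of ROS4HRI or the ARISE module; in a ROS2 system this logic would typically run inside a subscription callback.

```python
import math
from typing import Optional, Sequence, Tuple

Point = Tuple[float, float, float]

# MediaPipe Hands landmark indices
WRIST, THUMB_TIP, INDEX_TIP, MIDDLE_MCP = 0, 4, 8, 9


def is_pinch(landmarks: Sequence[Point], ratio_threshold: float = 0.25) -> bool:
    """Pinch if fingertip distance is small relative to palm length (scale-invariant)."""
    palm = math.dist(landmarks[WRIST], landmarks[MIDDLE_MCP])
    if palm == 0.0:
        return False  # degenerate detection; ignore
    return math.dist(landmarks[THUMB_TIP], landmarks[INDEX_TIP]) / palm < ratio_threshold


class GripperCommander:
    """Hypothetical consumer mapping pinch gestures to gripper commands."""

    def __init__(self, frames_required: int = 3) -> None:
        self.frames_required = frames_required
        self.closed = False  # current commanded gripper state
        self._streak = 0     # consecutive frames contradicting the current state

    def update(self, landmarks: Sequence[Point]) -> Optional[str]:
        """Process one frame; return "close"/"open" when the state changes, else None."""
        pinch = is_pinch(landmarks)
        if pinch == self.closed:
            self._streak = 0  # gesture agrees with current state: reset debounce counter
            return None
        self._streak += 1
        if self._streak < self.frames_required:
            return None  # wait for a stable gesture before acting
        self._streak = 0
        self.closed = pinch
        return "close" if pinch else "open"
```

Normalizing by palm length keeps the threshold independent of how far the hand is from the camera, and the debounce counter prevents a single noisy frame from toggling the gripper.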

The evolution towards Industry 5.0 demands robots that can interact in ways that are more human, reliable, and efficient. On this path, ROS4HRI is emerging as a key standard for seamless human-robot collaboration, ensuring interoperability, scalability, and trust. Its applications extend beyond industry into healthcare, education, and services, where the ability to understand and respond to people is essential.

Co-authors

Adrián Lozano. Researcher at the Industrial and Digital Systems Division, CARTIF.

Séverin Lemaignan. Senior Scientist leading Social AI and Human-Robot Interactions at PAL Robotics, partner in the ARISE project.